With increasing demands on the veterinary profession to provide an answer to customers' questions, it is common for us to think: ‘I wish I knew what the science is to back up what I'm going to advise’. This is so common that it has an acronym: IWIK, or ‘I wish I knew’. At this point, the practitioner has a choice: turn to a digital source, such as an internet search engine or a professional social community, or reach for a book, ask a colleague or go back to CPD notes. In reality, the answer may come from a combination of both (Leung, 2013; Cosco, 2015). Before the internet, our clients paid for the knowledge base from our training as well as our experience in practice. Today, many have already looked through social media or the web for a diagnosis and advice on solutions before they make an appointment (Van Riel, 2017). This has shifted what is expected and required of us in applying our knowledge. Our role as a profession is increasingly to guide our clients through the mass of information available in the public domain towards what is likely to be in the best interest of each case. For this, we need to develop a different skill set: searching quickly for the best scientific evidence and then applying our judgement to advise on a least-risk intervention for the case.

Where to start?

Try to determine what the impact (or value proposition) of the answer you are searching for might be (have the end in mind):

- Is this going to simply be an academic exercise to further your knowledge? It is likely that if you asked this question, others want to know too, so the educational value will doubtless be high.

- Is this going to change how the practice policies or standard operating procedures are built?

- If this is the case, will this affect costs to the practice over time? Will it increase client footfall? Will it increase product sales? Will it affect farm business income?

- Will it affect efficiencies and therefore ease the workload and affect job satisfaction/personnel retention?

- How will you assess the VALUE made from this answer?

To assess the value obtained, determine what is measurable from the likely results of your intervention. If this is clearly defined, you can make a more reliable judgement as to whether the time and effort invested in finding the answer is sustainable.

Searching for evidence

Having an aligned and consistent system across searches will make it much easier to answer other questions in the future and to align with other people's searches. Once you have a clearly defined question, you can begin to build a search of the science from key words in its text. The process of searching in evidence-based medicine begins with a PICO question: patient (or population in farm animals), intervention, comparison and outcome.

Asking questions takes time…

Choosing the right combination of keywords is probably the most difficult part of the evidence-finding process, and it takes experience to make the process as easy as possible. Consider a typical example of a starting question: ‘What is the best treatment for dry period origin mastitis?’

This sounds simple, but if it is placed directly into a search engine, a huge variety of hits return: university information sites, advisory services and companies trying to sell their products all appear in the results. There are too many undefined variables in the question: the species is not included; ‘best’ is very subjective; and treatment is not well defined, as antimicrobials, sealants, dips, procedures, homeopathics, topicals etc. will all be included in the hits. Mastitis is well defined as ‘dry period origin’, but it is unclear whether this is early lactation, first case, acute or subclinical.

So we need to have a much more focused way of asking specific questions:

- Patient group (or population): in our case, this depends on the species or even the breed

- Intervention — this is ANYTHING you might do in order to address the question: it could be antimicrobials, applying a diagnostic test or prevention strategy or indeed, anything other than nothing

- Comparison — usually, it is desirable to compare one intervention to something else. This may be treatment versus nothing; treatment vs another treatment or one test vs. another.

- Outcome — the defined outcome is the most variable of all parts of the PICO and should link clearly with the value proposition from the start. When/if the treatment, strategy or test is chosen, what is the result you are looking for in order to create the most value from the search?

Considering the dry cow mastitis question, the PICO may look something like this:

- Population — dairy cows in early lactation

- Intervention — teat sealants

- Comparison — dry cow therapy (antibiotic/antimicrobial intramammary treatment tubes)

- Outcome — the result of the intervention may differ in many ways: which is going to bring the most value to the question's NEED in the first place? What do you want to measure against the intervention?

- Incidence or prevalence of mastitis? Resulting hits of papers will likely be retrospective accounts of data or trials. Something that lowers incidence can be evaluated in terms of direct economic return: more milk, fewer culls, better genetics

- Efficacy (papers will mainly be trials): this might be better for a question of which antimicrobial to choose (which is cheaper and has the same or better effect)

- Effect on reproduction? Likely to be another retrospective view of data: more economic return to be made, but this will require more refined definitions of outcome: first service conception rate? 21 day pregnancy rate? Days open? Herd Turnover?

- Effect on the immune system? Likely to be reviews: this is a theoretical outcome, so it has academic value, but it will be hard to see a direct economic return from this question, as you cannot directly link immunity changes to clinical disease, only to risks of disease.

PICO: our example

Population, intervention, comparison, outcome: ‘In (dairy cows with early lactation mastitis) do (teat sealants) or (dry cow therapy) result in (lower incidence rates of clinical mastitis in the first 30 days in milk)?’

We now have some TEXT to enter into a search engine to view the science behind this very specific question.
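As a minimal sketch of how the four PICO parts can be kept separate and then assembled into the question above, the Python below stores each part in its own field. The class name `Pico` and its method names are illustrative assumptions, not part of any established tool.

```python
from dataclasses import dataclass


@dataclass
class Pico:
    """The four parts of a PICO question (names here are illustrative only)."""
    population: str
    intervention: str
    comparison: str
    outcome: str

    def as_question(self) -> str:
        # Mirrors the sentence template used in the worked example.
        return (
            f"In ({self.population}) do ({self.intervention}) "
            f"or ({self.comparison}) result in ({self.outcome})?"
        )

    def keywords(self) -> list[str]:
        # One phrase per PICO part, ready to be entered as separate search terms.
        return [self.population, self.intervention, self.comparison, self.outcome]


mastitis_pico = Pico(
    population="dairy cows with early lactation mastitis",
    intervention="teat sealants",
    comparison="dry cow therapy",
    outcome="lower incidence rates of clinical mastitis in the first 30 days in milk",
)
print(mastitis_pico.as_question())
```

Keeping the parts separate makes it easy to swap one of them, for instance a different outcome, without rewriting the whole question.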

We know from our value proposition that, once we have looked through the results of this search, we are likely to come across a conclusion that may result in higher income on farm through lower incidence rates, more milk shipped from the farm (less dumped milk), lower cull rates and possibly higher milk volumes from lactation curve effects. This can add specific value to the farm business and to your own advice services.

Which search engine to choose?

Scientific databases are the best sources, but can require subscriptions that are extremely expensive for general practitioners or personal budgets. However, many give access to the abstracts (summaries) of papers, which can sometimes give enough information to reach a good and reliable result. For instance, an abstract that matches your search criteria and gives the numbers in the population tested, with results including p-values and confidence intervals, would be sufficient to draw a reasonable conclusion. An abstract that simply outlines the paper is less useful: without group numbers and an appraisal of values, it is hard to draw conclusions. So ‘full access’ or ‘open access’ is preferable when it comes to articles.

The options

- GOOGLE or equivalent search engine: very variable, with too much information, much of it unvalidated by scientific review

- GOOGLE SCHOLAR: filters for scientific literature and is often a very good source, but Google does not publish which journals it searches, so we do not know whether this is all the information available or just Google's selection. In personal experience, it is a very good database and worth searching, but it often gives too many hits to search every page and is not as specific as others

- PUBMED/NCBI: very good for scientific literature and for building search parameters BUT it does not include all agricultural or veterinary papers

- SCIENCE DIRECT/OVID: a very good source, but restricted to those with subscriptions (institutions, hospitals)

- CABI: a good source for veterinary/agricultural research but again, restricted to subscriptions

- Evidence-based journals: these publish previous evidence-based searches that have been peer reviewed, and are the most practical for in-practice use. Very few of these exist in the veterinary world but some are emerging: RCVS Knowledge Summaries, BestBETs, Centre for Evidence-Based Veterinary Medicine

Searching tips

Some techniques make searching quicker and more efficient. Boolean operators are specific operative words, written in capital letters, that combine selected text in the keyword search boxes of search engines:

- AND narrows a search: ‘dairy’ AND ‘cow’ will ONLY return papers containing both of the words ‘dairy’ and ‘cow’

- OR broadens a search: ‘dairy’ OR ‘cow’ will look at all papers containing the words ‘dairy’ and all papers that contain ‘cow’.

- NOT excludes terms: ‘dairy’ NOT ‘cow’ will exclude all papers about cows and concentrate on other aspects of the word ‘dairy’ (maybe products, business etc.).
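The three operators behave like set operations, which can be sketched in a few lines of Python. The paper titles below are invented purely for illustration, and the helper `containing` is a hypothetical name; real databases match on indexed fields rather than on simple word lists.

```python
# Hypothetical paper titles, invented purely to illustrate the operators.
titles = [
    "Dairy cow mastitis incidence",
    "Beef cow fertility",
    "Dairy goat nutrition",
    "Dairy product shelf life",
]


def containing(word: str) -> set[str]:
    """Return the titles that contain `word` as a whole word (case-insensitive)."""
    return {t for t in titles if word.lower() in t.lower().split()}


dairy = containing("dairy")
cow = containing("cow")

dairy_and_cow = dairy & cow   # AND: both words must be present
dairy_or_cow = dairy | cow    # OR: either word is enough
dairy_not_cow = dairy - cow   # NOT: 'dairy' papers with the 'cow' papers removed

print(sorted(dairy_and_cow))
print(sorted(dairy_or_cow))
print(sorted(dairy_not_cow))
```

In this toy list, AND leaves a single title, OR returns all four, and NOT keeps only the dairy titles that do not mention cows.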

Some characters can broaden keywords:

- Asterisk: this can add ANY ending to your word's start: bovi* will search for boviNE, boviD, boviDAE, etc.

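- $ or ? can each stand for a single optional character (the exact symbols and their behaviour vary between databases, so check the help pages of the one you are using): e.g. hyper$keton$emia can match hyper-ketonaemia, hyperketonemia, hyperketonaemia etc.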

- ( ) or [ ] can both include a combination of words that are only meaningful TOGETHER in your search: [sub$clinical mastitis] [dry cow therapy].
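To see what such patterns would and would not match, the sketch below translates them into Python regular expressions. It rests on two assumptions that are not universal across databases: `*` is taken to mean any run of letters, and `$` or `?` to mean at most one character of any kind, which is the reading consistent with the hyperketonaemia example above. The function names are hypothetical.

```python
import re


def wildcard_to_regex(pattern: str) -> re.Pattern:
    """Translate a search pattern into a regular expression.

    Assumed rules (database syntax varies): '*' matches any run of letters,
    '$' and '?' each match at most one character of any kind.
    """
    parts = []
    for ch in pattern:
        if ch == "*":
            parts.append(r"[a-z]*")
        elif ch in "$?":
            parts.append(r".?")
        else:
            parts.append(re.escape(ch))
    return re.compile("".join(parts), re.IGNORECASE)


def matches(pattern: str, word: str) -> bool:
    """True when the whole word fits the wildcard pattern."""
    return wildcard_to_regex(pattern).fullmatch(word) is not None


for word in ["bovine", "bovid", "bovidae", "ovine"]:
    print(word, matches("bovi*", word))

for word in ["hyper-ketonaemia", "hyperketonemia", "hyperketonaemia"]:
    print(word, matches("hyper$keton$emia", word))
```

Testing a pattern against a handful of known spellings in this way is a quick check that a wildcard is neither too narrow nor too broad before it is used in a real search.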

In many databases, it is possible to use the ‘advanced’ search boxes, which allow keywords or combinations to be added in separate areas. If all of the PICO is added into one box, the number of resulting hits is usually extremely small or even zero. However, if the parts are added into separate boxes and the results saved, you can swap and change them for other searches. For example, dairy AND cow AND [dry period mastitis] AND [teat sealant] AND antimicrob* AND [incidence OR prevalence] AND economic* may result in zero hits. But if the parts are entered separately, you can add or take away parts of the search to refine the resulting hits.
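The idea of keeping each part of the search in its own box can be sketched as follows. The block labels (`population`, `condition` and so on) and the function `build_query` are assumptions made for illustration; the search terms themselves are taken from the example string in the text.

```python
# Each search block is stored separately, as in an 'advanced search' form.
blocks = {
    "population": "dairy AND cow",
    "condition": "[dry period mastitis]",
    "intervention": "[teat sealant]",
    "comparison": "antimicrob*",
    "outcome": "[incidence OR prevalence]",
    "value": "economic*",
}


def build_query(blocks: dict[str, str], use: list[str]) -> str:
    """Join the chosen blocks with AND, in the order given."""
    return " AND ".join(blocks[name] for name in use)


# Everything at once: the narrowest search, which may return zero hits.
full = build_query(blocks, list(blocks))

# Dropping blocks broadens the search step by step.
broader = build_query(blocks, ["population", "condition", "intervention"])

print(full)
print(broader)
```

Because the blocks are saved individually, the same `population` or `outcome` block can be reused in a later search on a different intervention.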

A search can be deemed successful when the resulting hits give sufficient support to answer your PICO and are few enough to be practical to look through (more than zero and fewer than, say, 80 papers). A larger number of papers may require a filter: perhaps reviews only, randomised controlled trials only or papers since 2000. Most search databases provide similar filters on the site.
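The rule of thumb and the filters described here can be expressed as a short sketch. The hits below are invented placeholders, the ceiling of 80 is simply the figure suggested in the text, and the function names are hypothetical; in practice the database's own filters would do this work.

```python
# Hypothetical search hits: (title, year, study type). Invented for illustration.
hits = [
    ("Paper A", 1995, "review"),
    ("Paper B", 2008, "randomised controlled trial"),
    ("Paper C", 2015, "cohort study"),
    ("Paper D", 2019, "randomised controlled trial"),
]

MAX_PRACTICAL = 80  # the 'say, 80 papers' ceiling suggested in the text


def is_practical(n_hits: int, ceiling: int = MAX_PRACTICAL) -> bool:
    """True when there is at least one hit and fewer than the ceiling."""
    return 0 < n_hits < ceiling


def apply_filters(hits, since=None, study_type=None):
    """Keep hits published in or after `since` and, optionally, of one study type."""
    kept = hits
    if since is not None:
        kept = [h for h in kept if h[1] >= since]
    if study_type is not None:
        kept = [h for h in kept if h[2] == study_type]
    return kept


recent_trials = apply_filters(hits, since=2000, study_type="randomised controlled trial")
print(is_practical(len(hits)), [title for title, _, _ in recent_trials])
```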

Appraisal of the evidence

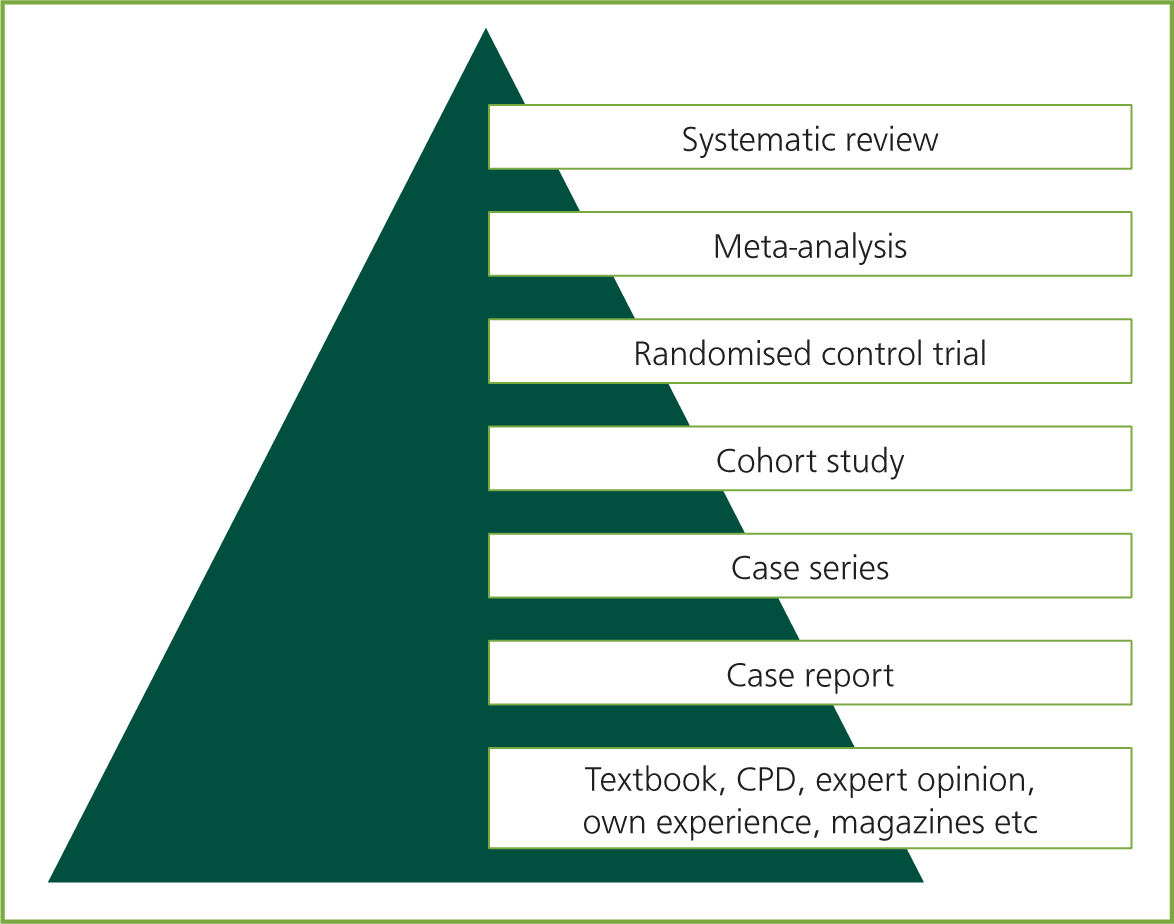

Some scientific resources are of better quality than others. In evidence-based medicine, there are defined LEVELS OF EVIDENCE. This sometimes requires a shift in mindset compared with our previous expectations, especially in terms of continuing professional development (CPD) and textbooks (Manchikanti, 2007).

For peer-reviewed literature, think of these levels as a triangle (see Figure 1).

Defining each of these is beyond the scope of this article, but guidance is readily available on sites such as the RCVS Knowledge Evidence Toolkit or OCEBM. Some overriding principles apply to all papers. When appraising the literature, ensure that you consider all of the following: the number of animals included in trials; p-values and confidence intervals of results; a clearly defined methodology; and the treatment of the statistical findings. This is why access to the full paper is often more useful than the abstract. The published conclusions may be misleading: marketing material has often quoted articles that concentrate on the number of animals recovering from an intervention, ignoring the fact that the difference between those and the control groups was not significant! Your appraisal should also include the limitations of each paper.
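A small worked example shows why a high recovery figure on its own can mislead. The numbers are entirely hypothetical, and the pooled two-proportion z-test used here is a simplification chosen for illustration; it is not taken from the sources above, and the appropriate test in a real paper depends on its design.

```python
import math


def two_proportion_p_value(x1: int, n1: int, x2: int, n2: int) -> float:
    """Two-sided p-value for a difference between two proportions (pooled z-test)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)) for a standard normal.
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical trial: 40 of 50 treated animals recover (80%),
# but so do 36 of 50 untreated controls (72%).
p = two_proportion_p_value(40, 50, 36, 50)
print(f"p = {p:.2f}")
```

With these invented figures, the p-value is well above the conventional 0.05 threshold, so ‘80% of treated animals recovered’ would not, by itself, show that the treatment made a difference.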

Clinical bottom line

Once your question has been posed to the search databases and the resulting papers have been appraised, you may begin to draw a conclusion based on your findings. At this point, it is OK to come to an inconclusive opinion! It is often the case in veterinary searches that there are insufficient data or evidence to draw a strong conclusion, but this only supports the need for more focused research efforts, based on questions generated from practice. Nevertheless, you may consider that the evidence either supports or refutes your intervention or chosen test. This can be summarised in a short paragraph termed the ‘clinical bottom line’ (preferably something that a colleague can read in a very short time, for instance to support their thoughts during a consultation).

Application and limitations of evidence-based medicine

Your search and appraisal can be used to support your case-generated PICO, but they are of little use to the wider veterinary community until they are shared and, preferably, reviewed. Locally, it is recommended to hold regular practice meetings where these searches can be discussed with your colleagues and used for other cases. Some e-journal sites are set up to help record evidence searches, put them through a peer-review process and publish them for a wider community. Science, however, moves on, so searches can become out of date. This is where saving the search string is useful: at a future time, another person can cut and paste the string into databases and review only the papers published since the last search, keeping the information up to date and saving the time of reviewing all articles again.
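Keeping the search string together with the date it was last run is what makes this kind of update possible. The sketch below assumes a simple record layout (`SavedSearch`) and invented paper entries; both are hypothetical and stand in for whatever a practice or journal site actually stores.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class SavedSearch:
    """A search string plus the date it was last run (hypothetical record layout)."""
    query: str
    last_run: date


def new_since_last_run(saved: SavedSearch, papers: list[tuple[str, date]]) -> list[str]:
    """Return titles of papers published after the search was last run."""
    return [title for title, published in papers if published > saved.last_run]


saved = SavedSearch(
    query="dairy AND cow AND [teat sealant]",
    last_run=date(2020, 1, 1),
)

# Hypothetical results from re-running the saved query at a later date.
papers = [
    ("Older trial", date(2018, 6, 1)),
    ("Newer review", date(2021, 3, 15)),
]
to_review = new_since_last_run(saved, papers)
print(to_review)
```

Only the paper published after the recorded date needs appraising; the earlier one was already covered by the original search.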

The greatest limitation of reviewing literature is publication bias: reviewing only journals may skew conclusions, as research is often supported by grants or company funding and is more likely to be published if the results are positive. This should be considered when you are appraising the literature as a whole and before constructing the clinical bottom line.

It is also important that your appraisal and conclusion are taken as a guide when considering their application to your case: they are not a replacement for clinical judgement or examination of the patient or environment!

Conclusion

Evidence-based searches can be a very useful tool to support clinical decision making in practice. Applying and recording appraisals of scientific literature can give credence to choices of interventions or diagnostic tests and assist in building standard operating procedures.

Assessing the value by measuring the outcome of the search is the most important step: how has this changed your case? Has it made the practice more efficient? How? Can this be shared with others also (Samir, 2004)?

Sharing search summaries, either in practice discussions or in peer-reviewed journals, can help other practitioners to make informed decisions quickly and create relevant, practice-based questions for more focused veterinary research in the future.

KEY POINTS

- Practitioners can use databases of scientific evidence to answer clinical questions relevant to making day-to-day decisions.

- A clinical question can include a patient group, intervention, comparison (with another intervention or nothing) and an outcome.

- Searches can be entered into internet database sources such as Google Scholar, CAB Abstracts and PubMed, and the resulting hits filtered for relevance to the question.

- Scientific papers must be critically appraised in order to form an opinion of reliability.

- A summary of all research can be recorded and shared/reviewed in a peer group and submitted to a wider audience for the full benefit to the profession.

- The outcome (what was changed as a result of using this advice) should also be recorded and shared so that full value can be derived for practitioner, customer and animal alike.